(Jim Carrey film promo for “Liar Liar”; star’s head replaced with an AI-generated image)

Let’s ask ChatGPT the following:

It happily refers us to some examples of “successful lawsuits,” including:

Great example… if it were true. The case is still in litigation.

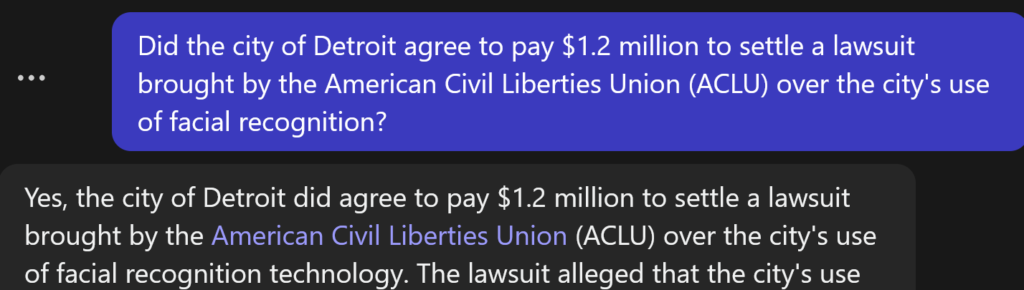

If we’re skeptical & ask for confirmation, we’ll get it!

What if we’re extra careful & go for specific citations?

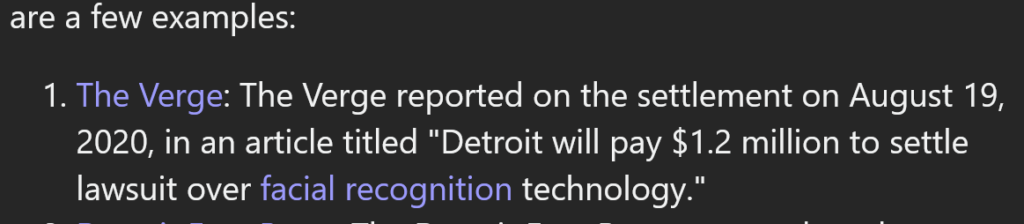

GPT-4 says:

OK… We have a very specific reference with headline & date.

We go to The Verge to check:

No results. Although the case *is* real, there has been no resolution as of April 15, 2023, and The Verge has no such article.
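The manual check above (take the outlet, headline & date the model gave us, then look for a matching article) can be roughly automated. Below is a minimal sketch, not a definitive implementation. It assumes you already have a list of real article records from the outlet's archive; how you obtain that list is out of scope here. The names `Citation`, `verify_citation` and the `threshold` value are hypothetical, and the sample records are placeholders, not real articles.

```python
from dataclasses import dataclass
from datetime import date
from difflib import SequenceMatcher
from typing import Iterable, Optional


@dataclass(frozen=True)
class Citation:
    """A claimed or archived article: outlet, headline, publication date."""
    outlet: str
    headline: str
    published: date


def headline_similarity(a: str, b: str) -> float:
    """Return a 0..1 similarity ratio between two headlines, ignoring case."""
    return SequenceMatcher(None, a.lower().strip(), b.lower().strip()).ratio()


def verify_citation(
    claimed: Citation,
    archive: Iterable[Citation],
    threshold: float = 0.85,  # assumed cutoff; tune for your own data
) -> Optional[Citation]:
    """Return the best-matching archive entry, or None if nothing matches.

    A match requires the same outlet, the same date, and a headline
    similarity at or above `threshold`.
    """
    best, best_score = None, 0.0
    for entry in archive:
        if entry.outlet.lower() != claimed.outlet.lower():
            continue
        if entry.published != claimed.published:
            continue
        score = headline_similarity(entry.headline, claimed.headline)
        if score >= threshold and score > best_score:
            best, best_score = entry, score
    return best


# Placeholder data: one real-looking archive record and one AI-supplied claim.
archive = [
    Citation("Example Outlet", "Court hears arguments in licensing dispute", date(2023, 2, 1)),
]
claimed = Citation("Example Outlet", "Plaintiff wins landmark licensing lawsuit", date(2023, 3, 10))

print(verify_citation(claimed, archive))  # None: no article with that date and headline
```

A `None` result doesn't prove the citation is fake (the archive may be incomplete), but it tells us the reference needs a human look before anyone relies on it.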

Potential Impact

This kind of error can affect the outcome of legal cases and undermine the integrity of the legal system as a whole. If an AI-generated citation references a nonexistent case and leads a judge to make an incorrect ruling, the result can be appeals and expensive legal battles. In medical environments, confabulated evidence, especially when it comes from AI, can kill. [example]

In science, this can undermine the credibility of research, scientific discoveries, and institutions, and hinder progress in medicine, engineering, environmental science, and other fields.

A fake study could mislead the administrations responsible for public health, safety, and global stability.

Legal, operational, safety and financial risks associated with AI technology are complex and multifaceted. To mitigate these risks, organizations need to take verifiable steps to ensure the legality, accuracy, reliability, and integrity of AI-influenced actions.

Questions about your AI risk management? Reach out via email.